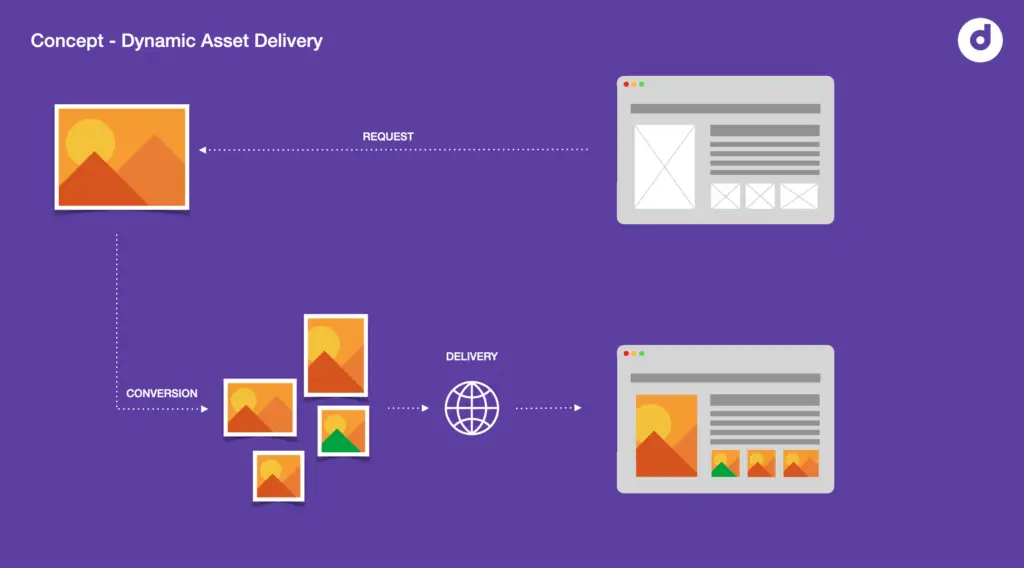

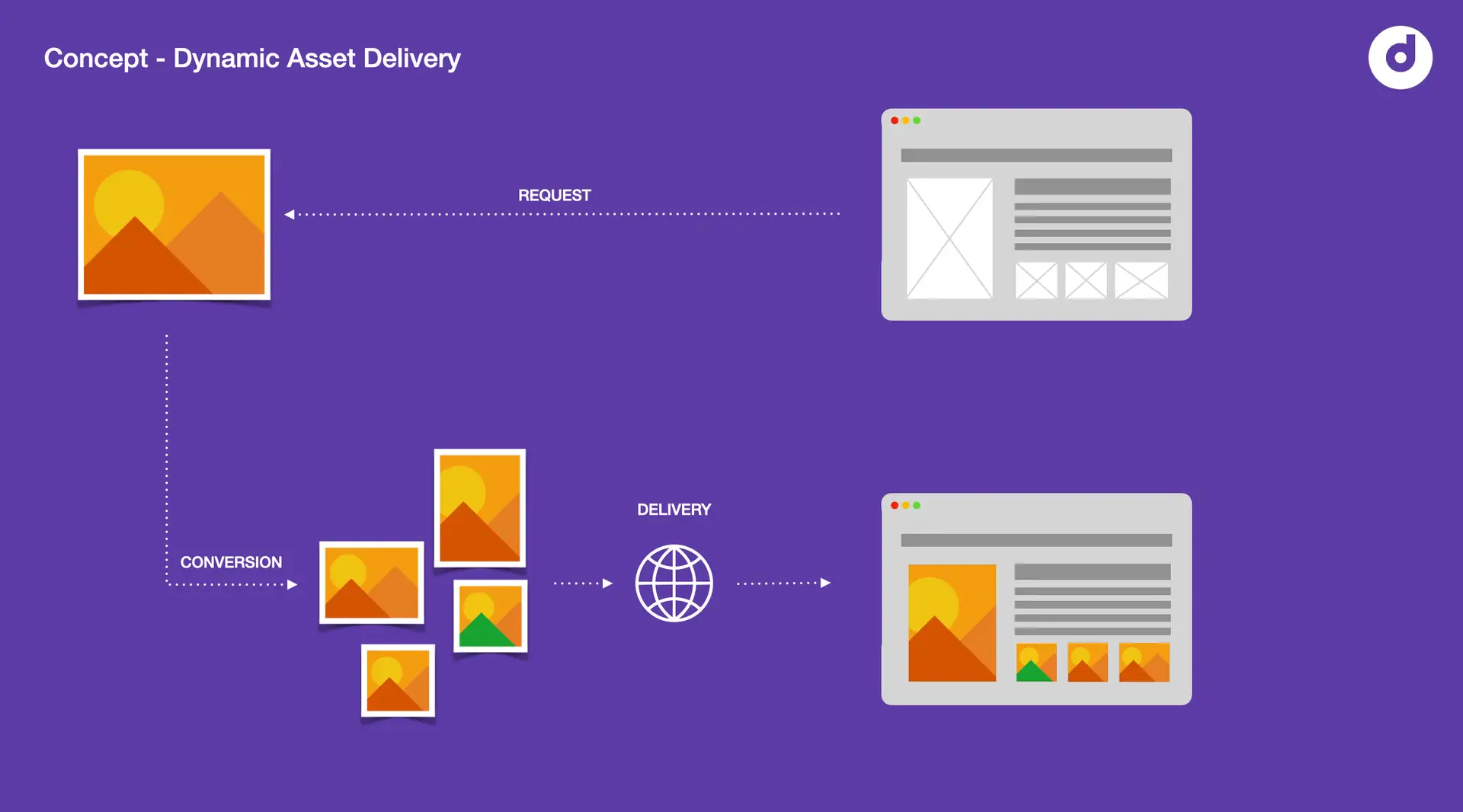

Dynamic Asset Delivery Services have become increasingly important, if not essential, for delivering assets managed in a DAM system to customer-facing webshops, as e-commerce sites grow in importance in a customer-centric world. These solutions help minimize the time it takes to load webshop pages, because an image or video on a page is not pulled from a static asset storage container. Instead, it is generated dynamically at the right size, color space, dimensions and format at the time the page loads. Websites fed with dynamically generated media content therefore have a huge advantage over websites with static links. In this article I’ll explain what these dynamic asset delivery solutions are, how they work and what benefits they provide.

How it works - the basics

To show an image or video in an optimized size and format when the page loads and the browser renders the HTML, the conversion parameters are passed to the conversion service. The service converts the image or video file accordingly and delivers the optimized media file via a CDN URL back to the requesting HTML page, all in one go. Because the conversion is offloaded to a backend conversion server instead of leaving the web browser to fit the image into the correct canvas, the page loads much faster and the user experience is much better.

These dynamic asset delivery services accept conversion parameters via HTTP requests or URLs and apply them to the media file in a local data store. This means that, typically (although there are exceptions), the conversion service must have access to the original file. As a consequence, any asset that is managed in a DAM needs to be pushed to the conversion service first.

Let’s take a quick look at what these conversion parameters could look like in a very simple example. Please note that the actual URL API syntax and parameter names differ from product to product. What I am showing here are simply examples, and any similarity to existing solutions is purely coincidental.

So, say you request an existing image at a width of 600 pixels. Maybe you would also like to ask the conversion service to scale up images that are narrower than 600 pixels. I am making this up, but it could be a key-value pair such as scale=up-600, meaning ‘scale up if below 600 px’. Your request could then look like this:

https://dynamic.delivery-platform.com/media/images/assetname.jpg?w=600&scale=up-600
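Because the parameters are just a URL query string, a consumer such as a webshop template can assemble them programmatically. The sketch below is a minimal Python illustration that reuses the made-up host and parameter names (`w`, `scale`) from the example above; `build_transform_url` is a hypothetical helper, not part of any vendor SDK.

```python
from urllib.parse import quote, urlencode

# Made-up host from the example above; real platforms use their own domains and syntax.
BASE_URL = "https://dynamic.delivery-platform.com/media/images/"


def build_transform_url(asset_name: str, **params: object) -> str:
    """Hypothetical helper: append transformation parameters to an asset URL."""
    url = BASE_URL + quote(asset_name)  # percent-encode the file name if needed
    query = urlencode(params)           # e.g. {"w": 600} -> "w=600"
    return f"{url}?{query}" if query else url


# Reproduces the example request: width 600, scale up if narrower than 600 px.
example_url = build_transform_url("assetname.jpg", w=600, scale="up-600")
```

Using `urlencode` keeps values such as file names correctly escaped instead of concatenating strings by hand.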

We’ll look at other, more interesting conversion parameters later on, but this is generally how it works. Some dynamic asset conversion and image optimization solutions also support configuring named conversion sets, so that a consumer can request the ‘optimized’ image simply by submitting the conversion set name. Say the main image on your webshop’s product detail page must be 600 px wide and 800 px high, and if it is a landscape image you need it cropped and delivered as a 600×800 pixel portrait.

One could create a conversion set and name it ‘main-pdp’ (main product detail page). The conversion set named main-pdp would be configured with the following parameters:

- color: sRGB

- width: 600px

- height: 800px

- scale: up-600

- crop: fp (focal point)

- comp: auto (compression)

- format: auto

You could then request the image with the named conversion, such as:

https://dynamic.delivery-platform.com/media/images/assetname.jpg?$main-pdp$

This API request returns the image cropped to 600 × 800 pixels around the image’s focal point, which has been set manually for that individual image. In more recent releases of conversion servers, AI models detect the main point of interest in an image and crop around it if needed.
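Conceptually, the service expands the set name into its stored parameters before converting. Here is a minimal Python sketch of that lookup. It assumes the `$name$` token syntax from the example, and it also assumes that explicit parameters later in the same URL override the preset, though real products may resolve conflicts differently. `CONVERSION_SETS` and `resolve_parameters` are hypothetical names.

```python
from urllib.parse import unquote, urlsplit

# Hypothetical server-side store of named conversion sets ("main-pdp" from the example).
CONVERSION_SETS = {
    "main-pdp": {
        "color": "sRGB", "width": "600", "height": "800", "scale": "up-600",
        "crop": "fp", "comp": "auto", "format": "auto",
    },
}


def resolve_parameters(url: str) -> dict:
    """Expand $set-name$ tokens and collect key=value pairs, left to right."""
    params: dict = {}
    for token in urlsplit(url).query.split("&"):
        if len(token) > 2 and token.startswith("$") and token.endswith("$"):
            # Named conversion set; an unknown name raises KeyError.
            params.update(CONVERSION_SETS[token[1:-1]])
        elif token:
            key, _, value = token.partition("=")
            params[unquote(key)] = unquote(value)  # later values override earlier ones
    return params


pdp = resolve_parameters(
    "https://dynamic.delivery-platform.com/media/images/assetname.jpg?$main-pdp$")
thumb = resolve_parameters(
    "https://dynamic.delivery-platform.com/media/images/assetname.jpg?$main-pdp$&width=300")
```

Keeping the preset on the server means the webshop only hardcodes the name. If the set’s parameters change later, every page that uses it picks up the change.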

Of course, the conversion parameters offered by dynamic media platforms go way beyond adjusting an image’s dimensions. If you look at the API documentation of most dynamic asset delivery platform vendors, you’ll notice two things. First, the API is usually very well documented. That makes sense: it is the main interface for these solutions and the bread and butter of how they are typically used. Second, you’ll find a growing number of API endpoints for image transformations in the following areas:

- colorspace

- file format

- dimensions

- crop, fill, scaling

- border styles

- styling effects

- …and many more

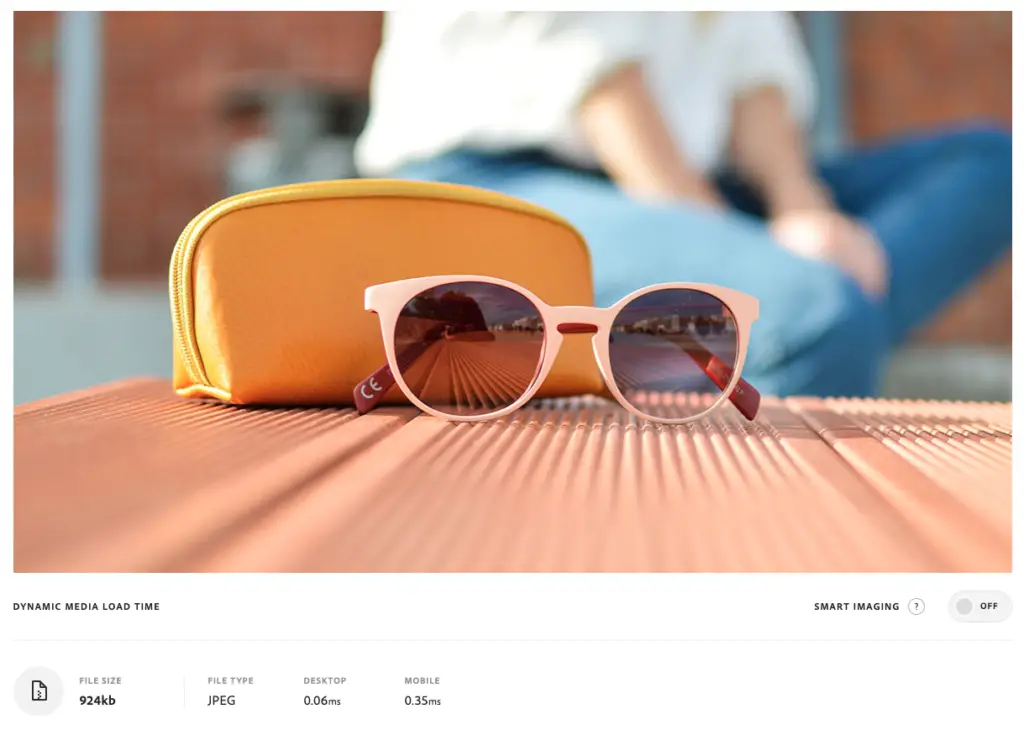

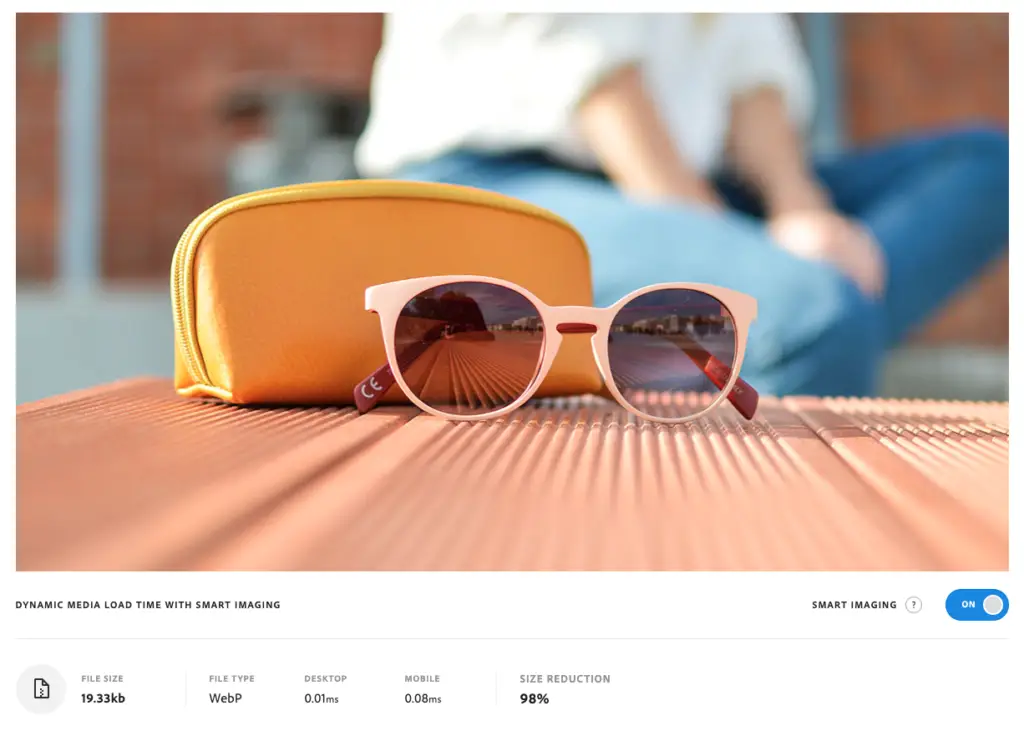

However, the main goal remains to load images on the user’s web page as fast as possible without losing quality. Think of fashion storefronts: users want to see the best-quality image shots, loaded in no time. This is where two areas come in that play a significant role in dynamic media asset delivery for modern e-commerce websites: auto-formats (automatic format and compression selection) and content delivery networks (CDNs), both of which we’ll cover in the following paragraphs.

Compression - smallest file size with as much detail as possible

Most of the time, images are stored and managed in the DAM system at their highest possible quality. This avoids losing visual quality and makes sure that output channels such as print get the quality they expect. If these images are delivered unchanged to a web page, the result is close to a disaster: the files are huge and the HTML page loads very slowly.

In most cases, however, you can reduce image quality considerably without a noticeable visual impact. Just as you should keep the best possible quality image in your DAM as a master, you should consider each image’s purpose and audience when delivering it, and use the lowest quality that is still acceptable for the image content, audience and purpose.

It gets more complicated than this, but the idea is to find out as much as possible about where the request is coming from. This includes the browser, identified via the user agent, since different browsers support different image formats. It also includes the connection speed, since the lower the speed, the smaller the target file size should be. The service then considers how these factors affect the requested image and its properties, such as color space and scaling parameters, and determines which format delivers the best quality at the smallest file size for that specific image.

For example, a query that offloads the decision about file format and compression factor to the conversion service could look like this:

https://dynamic.delivery-platform.com/media/images/assetname.jpg?w=600&h=800&c=scale&format=auto&compression=auto

Most image optimization services will start by choosing a modern image format such as WebP, AVIF or HEIF. These formats have one thing in common: high compression combined with high image detail, so that the human eye can hardly notice a difference between the original and the compressed version. The problem with these newer formats is that they are not supported equally by all web browsers on all platforms. Image optimization and delivery platforms take care of that too, using a fallback mechanism to send the best possible (high compression, high detail) image that the user’s browser supports.
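One signal commonly used for the format decision is the HTTP `Accept` request header. Browsers send this header with image requests, and it typically lists the image types they support. The text above mentions the user agent, so `Accept` is a swapped-in, complementary signal here. The Python sketch below picks AVIF, then WebP, and otherwise falls back to JPEG. The preference order and the `negotiate_format` name are assumptions for illustration. Real services combine more signals and may compare actual encoded file sizes.

```python
# Assumed preference order: best compression first; JPEG is the universal fallback.
PREFERRED = ("image/avif", "image/webp")
FALLBACK = "image/jpeg"


def _quality(params: list) -> float:
    """Read the q= weight of one Accept entry (defaults to 1.0)."""
    for param in params:
        name, _, value = param.strip().partition("=")
        if name.strip().lower() == "q":
            try:
                return float(value)
            except ValueError:
                return 0.0
    return 1.0


def negotiate_format(accept_header: str) -> str:
    """Pick the first preferred format the browser lists explicitly with q > 0."""
    accepted = set()
    for entry in accept_header.split(","):
        media_type, *params = entry.split(";")
        if _quality(params) > 0:
            accepted.add(media_type.strip().lower())
    # Wildcards such as */* or image/* are ignored on purpose: they do not prove
    # that the browser can decode AVIF or WebP.
    for fmt in PREFERRED:
        if fmt in accepted:
            return fmt
    return FALLBACK
```

A service that negotiates this way should also send a `Vary: Accept` response header, so that CDN caches keep the AVIF, WebP and JPEG variants of the same URL apart.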

More recently, AI models have been used to analyze the image content and apply higher compression to the less important areas of the image and lower compression to its main point of interest.

Content delivery networks

Most, if not all, dynamic media asset delivery services include a content delivery network as part of their offering. It makes so much sense that I can’t recall ever seeing a solution without one.

There is a lot of information on the internet regarding CDNs and how they work, but I still want to explain briefly how they work and why it makes so much sense for these software platforms to make use of them.

A content delivery network (CDN) is a network of interconnected servers that speeds up the loading of web pages for data-intensive applications. CDN can stand for content delivery network or content distribution network. When a user visits a website, data from the website’s web server must travel across the internet to reach the user’s computer. If the user is located far from that web server, a large file such as a video or website image takes a long time to load. With a CDN, the website’s content is cached on servers that are geographically closer to the users (called edge servers), so it reaches their computers much faster.

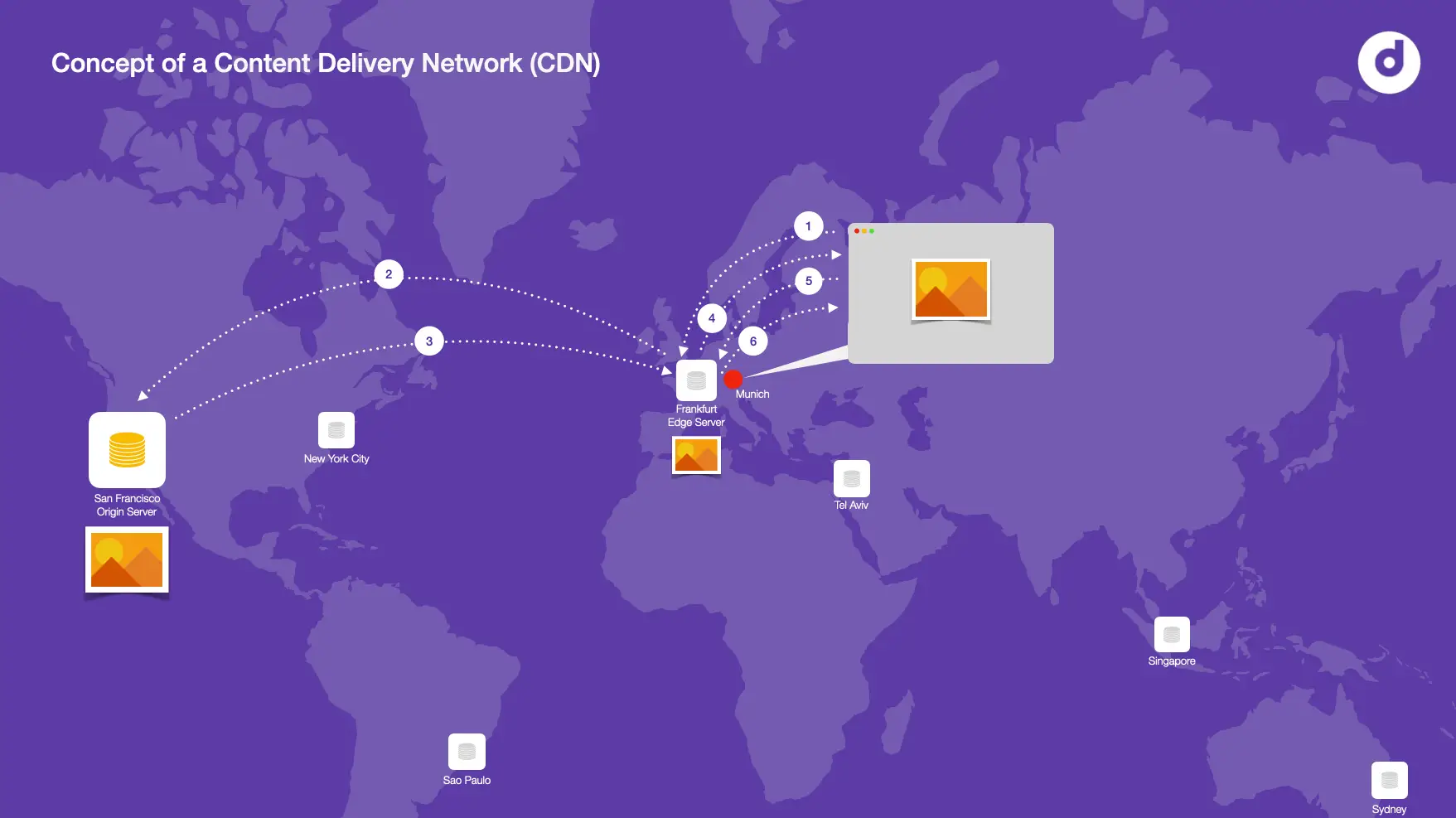

The illustration above shows the basic concept of how a CDN ensures fast file distribution over long distances. Let’s take a look at steps 1-6 one at a time.

1. A user browses a web page for the first time. While rendering the HTML page served by the web server, the browser finds an img tag and loads the image from its CDN URL.

2. The CDN checks whether the image is available on the closest edge server, where it may already be cached. In our example, the request comes from a user in Munich, and the CDN checks whether the image is available in Frankfurt, the geographically closest edge server. It is not available there, so the CDN goes looking for the image on the origin server.

3. It retrieves the image from the origin server and transfers it to the requesting edge server.

4. The edge server saves a copy of the image (caches it) and passes it on to the requesting client machine.

5. This is where it starts to get interesting. The next time the user browses a page that shows the same image, assume that the browser no longer holds a copy in its own cache. The CDN again checks whether the image is available on the edge server, just like in step 2, but this time the answer is ‘yes’: the image is available on the node closest to the user.

6. This time the edge server, i.e. the one geographically closest to the user, transfers the image straight from its cache. As you can imagine, transferring the image from a nearby location is much faster than requesting it and transferring it all the way from the origin server.
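Steps 2 to 6 follow the classic cache-aside pattern. The toy Python model below mirrors them for a single edge server. `EdgeServer` and the stand-in origin function are hypothetical. A real CDN adds expiry (TTL), eviction, request collapsing and many other concerns that are omitted here.

```python
from typing import Callable, Dict


class EdgeServer:
    """Toy edge node: serve cached copies, otherwise fetch from the origin and cache."""

    def __init__(self, location: str, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.location = location
        self._fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self._cache:                 # steps 5-6: served from the edge cache
            self.hits += 1
            return self._cache[url]
        self.misses += 1                       # step 2: not cached on this edge server
        body = self._fetch_from_origin(url)    # step 3: retrieve from the origin
        self._cache[url] = body                # step 4: keep a copy, then pass it on
        return body


origin_requests = []


def origin(url: str) -> bytes:
    """Stand-in for the origin server; records every request it receives."""
    origin_requests.append(url)
    return b"...image bytes..."


frankfurt = EdgeServer("Frankfurt", origin)
image_url = "https://dynamic.delivery-platform.com/media/images/assetname.jpg?w=600"
first = frankfurt.get(image_url)   # user in Munich, first visit: cache miss
second = frankfurt.get(image_url)  # later visit: cache hit, origin not contacted
```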

Typical integration with DAM systems

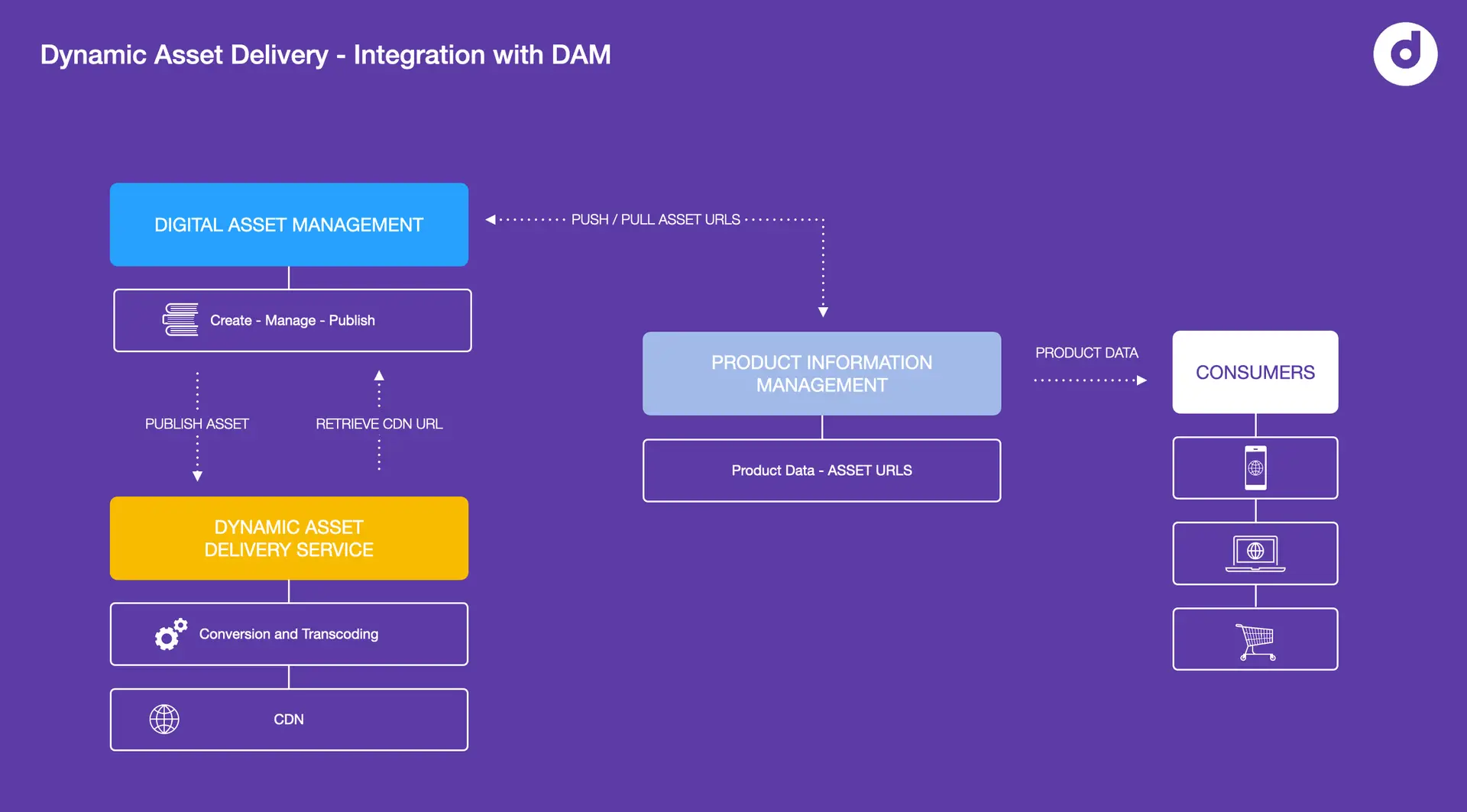

In an enterprise setup, DAM systems are always at the heart of the marketing technology stack. Any media asset used in any of the organization’s output channels, be it print, social, web, e-commerce or elsewhere, should be managed in a central Digital Asset Management system. Images and videos published on the organization’s digital storefronts originate in the DAM. There, cataloging, access management, collaboration and publishing are managed, with comprehensive workflow automation services routing all assets through their life cycle in the organization.

When assets are approved for publication on a company’s e-commerce system, it makes perfect sense for them to be transferred from the DAM to downstream distribution systems.

Once an asset is published, it is transferred from the DAM data store to the dynamic media delivery platform’s storage container, typically via API. The asset conversion and delivery service ingests the new asset, stores it in its storage container and database, and waits to accept transformation requests via its URL API.

It is important for the DAM system to know whether and when the asset was published, where it sits and how it can be accessed. This is why the public CDN URL typically gets pushed back to the DAM, again via API or other methods. This way, other third-party systems can consult the DAM to retrieve the public CDN URL of a published asset. In the example illustrated above, the PIM system gets an asset’s public URL from the DAM, possibly at the time the asset is assigned to a product in the PIM. When the PIM system then publishes the product catalog to the e-commerce system, all public image URLs are immediately available. Depending on the system and its configured behavior, it is now only a matter of ‘asking’ the dynamic delivery service for an image in the right size and format.
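The round trip described above can be sketched in a few lines of Python: push the asset, receive a public URL, and write that URL back to the DAM so a PIM can read it. Everything here is hypothetical. `DamAsset`, `DeliveryPlatform.ingest` and `publish` stand in for whatever APIs a given DAM and delivery platform actually expose. In practice, these calls are HTTP requests rather than in-memory method calls.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class DamAsset:
    asset_id: str
    filename: str
    data: bytes
    cdn_url: Optional[str] = None  # filled in once the asset is published


class DeliveryPlatform:
    """In-memory stand-in for a dynamic delivery platform's ingest API."""

    base_url = "https://dynamic.delivery-platform.com/media/images/"

    def __init__(self) -> None:
        self.storage: Dict[str, bytes] = {}

    def ingest(self, filename: str, data: bytes) -> str:
        self.storage[filename] = data     # keep the original for later conversions
        return self.base_url + filename   # public URL that transformations build on


def publish(asset: DamAsset, platform: DeliveryPlatform) -> str:
    """Push the original to the platform and write the public URL back to the DAM."""
    asset.cdn_url = platform.ingest(asset.filename, asset.data)
    return asset.cdn_url


asset = DamAsset("A-1001", "assetname.jpg", b"original high-res bytes")
platform = DeliveryPlatform()
publish(asset, platform)
# A PIM reading the URL from the DAM only needs to append the conversion it wants.
pim_image_url = f"{asset.cdn_url}?$main-pdp$"
```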

Next-Gen image manipulation using AI models

Given how quickly artificial intelligence and deep learning models are influencing our industry (and humanity), it is not surprising that manipulating images (as in ‘editing content’) is no longer reserved for humans. Machines can now do it to a high standard, often outperforming manual human image editing skills.

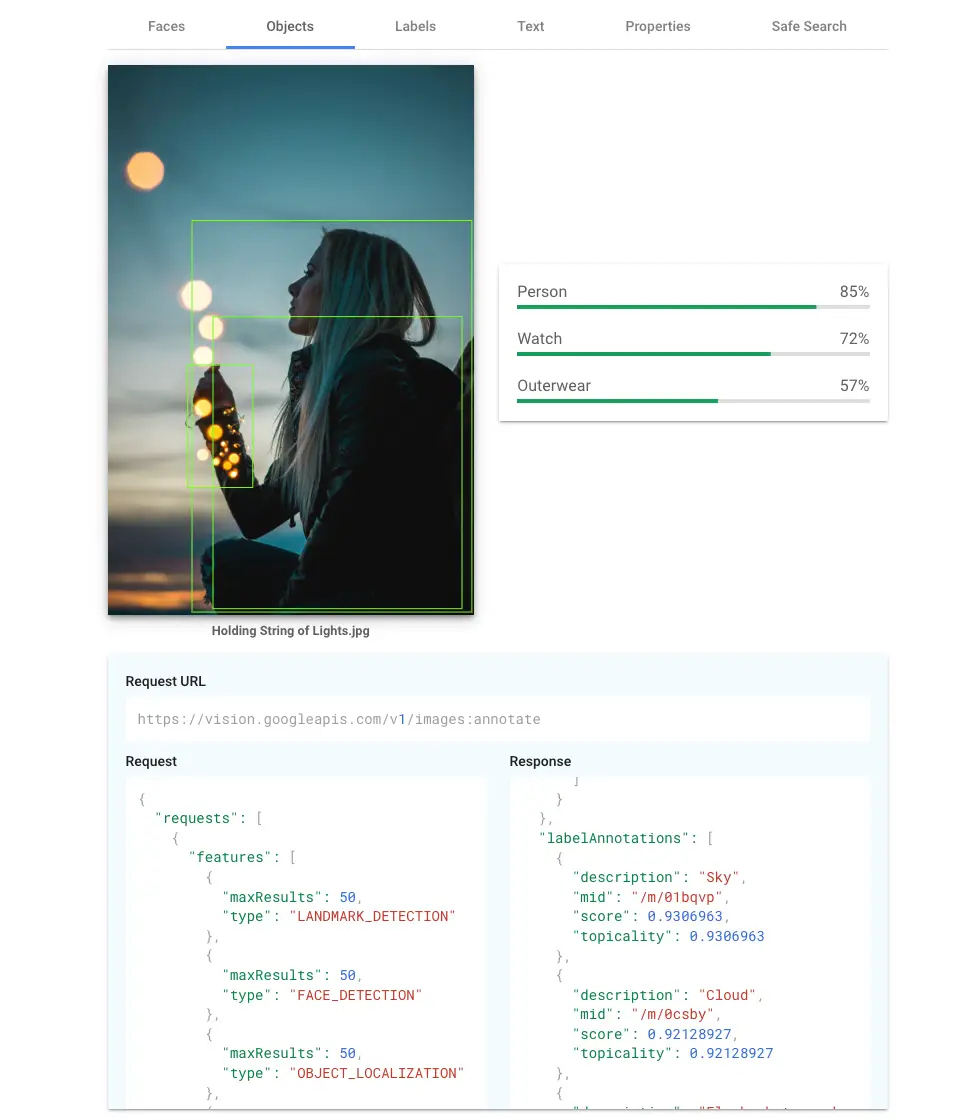

There is a lot of talk about how AI is revolutionizing the DAM space, in areas including automated tagging, improved search, intelligent content recommendations and content moderation. Looking at today’s state of development, I personally believe the most relevant changes have taken place in computer vision and natural language processing.

Computer Vision is an area of artificial intelligence that uses deep neural networks to analyze and manipulate image and video content. A lot of the features I have listed below are possible only because of the fantastic achievements in computer vision fields such as object detection, object recognition and segmentation.

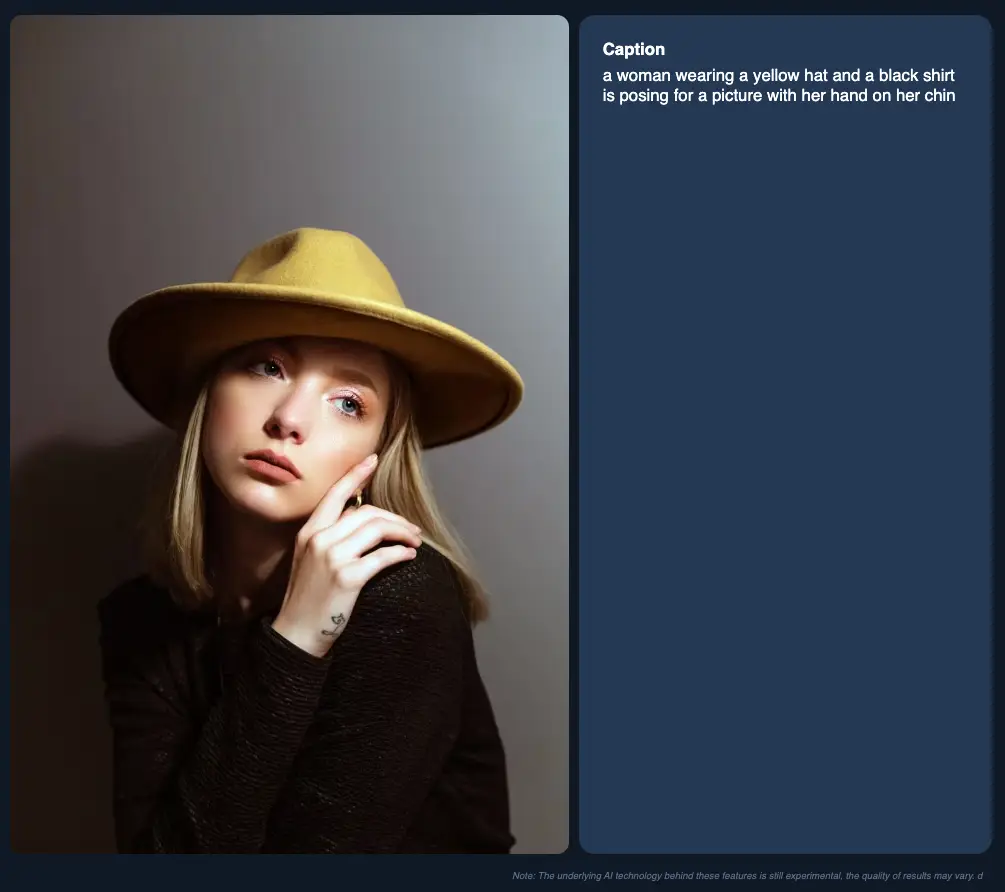

In the DAM space, natural language processing is most relevant for auto-captioning and speech-to-text. It also overlaps with machine translation, which makes it possible to provide content in various languages automatically. NLP also serves as a great interface for passing instructions to machine-based image manipulation.

Let’s take a look at a few examples.

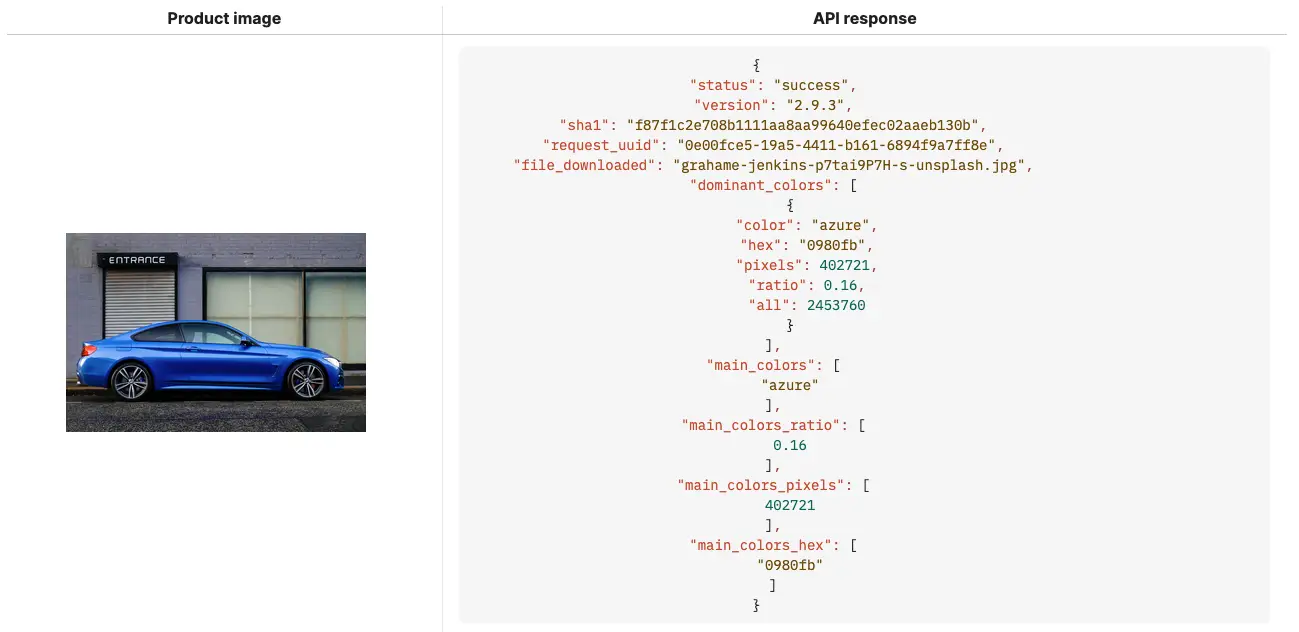

- Color palette extraction

Similar to the feature above, if an API call is submitted with an instruction to retrieve the most prominent colors in an image, the response could be a list of the most used colors, expressed in various color spaces and sorted by prominence. Consumers then benefit from improved searching and filtering based on colors, for example.
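As a rough illustration of the idea, the Python sketch below quantizes each pixel into coarse color buckets and ranks the buckets by how many pixels fall into them. This is plain frequency counting, a swapped-in simplification. Commercial services likely use more sophisticated clustering and report several color spaces. The input is assumed to be a sequence of RGB tuples, for example what Pillow’s `Image.getdata()` returns for an RGB image. `extract_palette` is a hypothetical name.

```python
from collections import Counter
from typing import Iterable, List, Tuple

RGB = Tuple[int, int, int]


def extract_palette(pixels: Iterable[RGB], n: int = 5,
                    step: int = 32) -> List[Tuple[str, float]]:
    """Return up to n (hex color, share of pixels) pairs, most prominent first."""
    counts: Counter = Counter()
    total = 0
    for r, g, b in pixels:
        # Snap each channel to the center of its bucket so near-identical shades merge.
        bucket = tuple(min(c // step * step + step // 2, 255) for c in (r, g, b))
        counts[bucket] += 1
        total += 1
    if total == 0:
        return []
    return [("#{:02X}{:02X}{:02X}".format(*color), count / total)
            for color, count in counts.most_common(n)]


# 60 % near-white backdrop, 30 % blue product, 10 % red detail.
sample = [(250, 250, 250)] * 6 + [(10, 20, 200)] * 3 + [(200, 10, 10)]
palette = extract_palette(sample, n=3)
```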

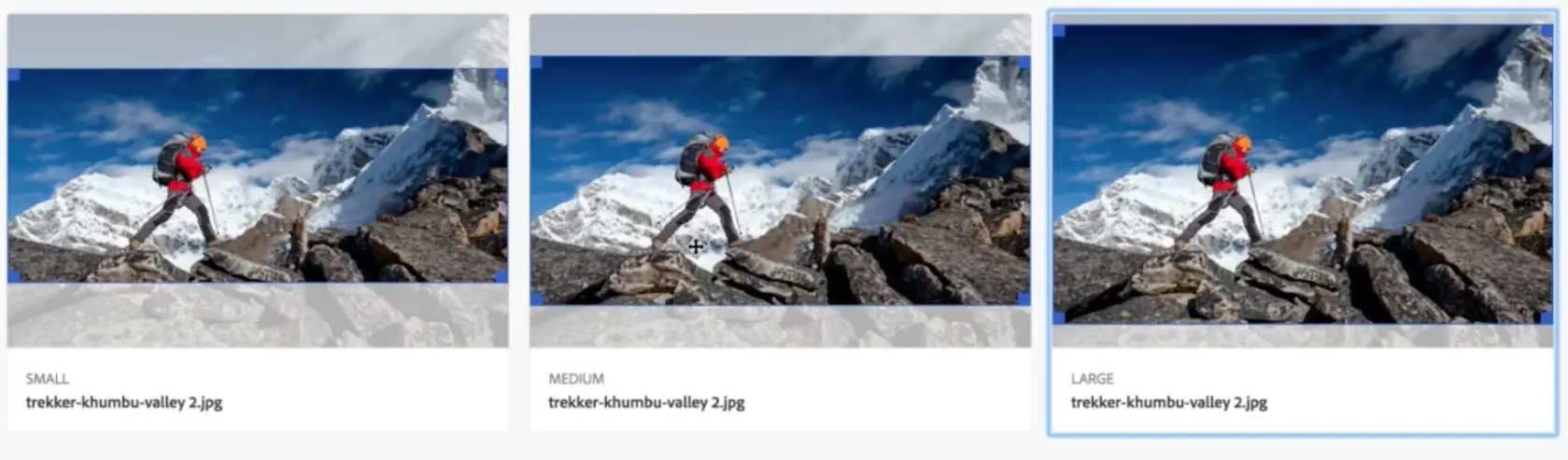

- Focal Point Cropping

If you ask the image transformation service to crop an image to a certain width and height, and provide these parameters as pixel values via the API, you somehow have to tell the engine where to start counting pixels for the crop. Should it start from the top-left, top-right, bottom-left, or somewhere else? In many cases, you won’t even know what the image you need cropped looks like. This is where focal point cropping comes in.

You either provide the x and y coordinates of the focal point (the main area of interest that you want to keep in the center of your image, even after cropping), or an AI model uses object detection to find the main object of interest in the image by itself. In the latter case, the target dimensions are applied around the main object detected by the AI.
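The arithmetic behind focal point cropping is simple. First, scale the image so it covers the target size. Then center the crop window on the focal point, and shift the window back inside the image if it would cross an edge. Here is a minimal Python sketch, assuming the focal point is given in source-pixel coordinates. In the AI variant, a detector would supply those coordinates. `focal_point_crop` is a hypothetical helper.

```python
from typing import Tuple


def focal_point_crop(src_w: int, src_h: int, target_w: int, target_h: int,
                     focal_x: float, focal_y: float) -> Tuple[float, Tuple[int, int, int, int]]:
    """Return (scale, (left, top, right, bottom)) in scaled-image coordinates."""
    # Scale so the image fully covers the target box.
    scale = max(target_w / src_w, target_h / src_h)
    scaled_w, scaled_h = round(src_w * scale), round(src_h * scale)
    # Center the crop window on the scaled focal point ...
    left = round(focal_x * scale - target_w / 2)
    top = round(focal_y * scale - target_h / 2)
    # ... then clamp it so it never extends beyond the image edges.
    left = min(max(left, 0), scaled_w - target_w)
    top = min(max(top, 0), scaled_h - target_h)
    return scale, (left, top, left + target_w, top + target_h)


# Landscape 1600x1200 shot, 'main-pdp' target of 600x800, subject on the right.
scale, box = focal_point_crop(1600, 1200, 600, 800, focal_x=1200, focal_y=600)
```

In this example the image is scaled to roughly 1067×800. The window ends up at (467, 0, 1067, 800) because a crop centered on the focal point (x = 500 to 1100) would run past the right edge.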

- Face detection

This is a special one and is often misused or misunderstood. Face detection is a subclass of object detection in which the objects are human faces. Face recognition, however, is different: it compares detected faces in an image or video with a reference face and performs an action based on the result (match or mismatch). In the simplest case, it tags the detected and recognized face with a name.

Face detection is simpler: it is all about detecting special objects, i.e. faces, in an image and performing some action based on them. One use case is cropping the image to a set of dimensions while leaving the detected face(s) untouched and cropping around them.

- Brand detection

This is also a special case of object detection. It is about detecting brand logos or reading brand names using OCR technology. By identifying logos in user-generated content, this technology helps with brand monitoring, ensuring compliance with brand guidelines and protecting brand integrity.

- Background Removal

Automated background removal was and still is one of the more popular functions, along with automated keyword tagging I’d say.

Background removal uses a computer vision technique called semantic segmentation. Unlike object detection, which locates objects with bounding boxes, segmentation identifies objects in an image on a per-pixel basis, producing sharp edges. It uses multi-layered neural networks, trained on extensive datasets to cover as many edge cases as possible, to process each pixel and classify it as foreground or background.

What makes this functionality so popular is its use case and the fact that it became a game changer in the e-commerce world.

Automatically transforming product shots, for example, into assets with a transparent background in a matter of seconds eliminates fiddling with tedious image editing software or outsourcing the task to photo retouchers. Professional-grade background removal can be achieved as one step in a fully automated process, saving valuable time and resources.
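Once a segmentation model has produced a per-pixel foreground mask, producing a transparent background is the easy part: the mask becomes the alpha channel. The Python sketch below assumes such a mask already exists, with one foreground probability per pixel. The model itself is out of scope, and `apply_foreground_mask` is a hypothetical helper. A hard threshold keeps the example simple. Production tools often keep soft alpha values at the edges, for example for hair and fabric.

```python
from typing import List, Sequence, Tuple


def apply_foreground_mask(pixels: Sequence[Tuple[int, int, int]],
                          mask: Sequence[float],
                          threshold: float = 0.5) -> List[Tuple[int, int, int, int]]:
    """Convert RGB pixels to RGBA: opaque where mask >= threshold, transparent elsewhere."""
    if len(pixels) != len(mask):
        raise ValueError("mask must contain exactly one value per pixel")
    return [(r, g, b, 255 if m >= threshold else 0)
            for (r, g, b), m in zip(pixels, mask)]


# Two pixels: a product pixel (foreground) and a studio-backdrop pixel (background).
rgba = apply_foreground_mask([(180, 40, 40), (245, 245, 245)], [0.97, 0.03])
```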

- Generative Fill

When Adobe first announced this feature in a new version of Photoshop, it seemed like a revolution and a real-life use case for generative AI. Only months later, image optimization and dynamic media asset platforms started to make use of it. They provided an API interface to ‘invent’ pixels that didn’t exist in an image yet, but that make sense given the image content.

The way it works: you provide a target dimension for the new image and instruct the conversion engine to generate new pixels for the areas that don’t exist in the original. It may look like this:

https://dynamic.delivery-platform.com/media/images/assetname.jpg?h=1200&fill=gen&format=auto&compression=auto
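Before a generative model can ‘invent’ pixels, the service has to work out where the missing areas are. The Python sketch below shows that layout step under stated assumptions. The full target canvas size is known, and the original is scaled to fit inside it without cropping and centered. Every uncovered strip is then returned as a region to be filled. `outpaint_layout` is hypothetical, and the generation itself is not shown.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


def outpaint_layout(src_w: int, src_h: int,
                    target_w: int, target_h: int) -> Tuple[Box, List[Box]]:
    """Place the scaled original in the target canvas and list the empty strips."""
    scale = min(target_w / src_w, target_h / src_h)  # fit inside, never crop
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    left, top = (target_w - new_w) // 2, (target_h - new_h) // 2
    right, bottom = left + new_w, top + new_h
    regions: List[Box] = []
    if left > 0:
        regions.append((0, 0, left, target_h))           # strip on the left
    if right < target_w:
        regions.append((right, 0, target_w, target_h))   # strip on the right
    if top > 0:
        regions.append((0, 0, target_w, top))            # strip on top
    if bottom < target_h:
        regions.append((0, bottom, target_w, target_h))  # strip at the bottom
    return (left, top, right, bottom), regions


# Landscape 1200x800 original extended to a 1200x1200 square canvas.
placement, to_generate = outpaint_layout(1200, 800, 1200, 1200)
```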

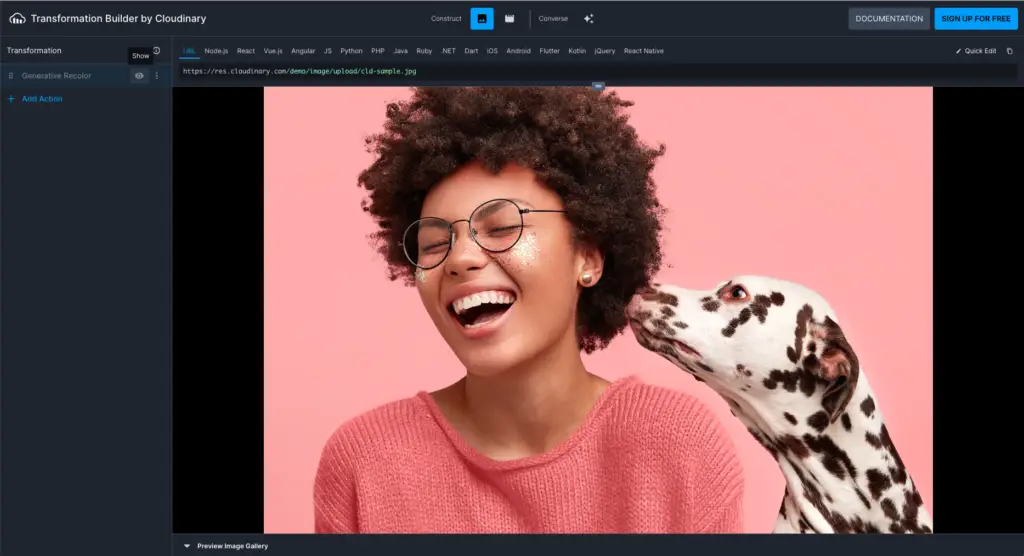

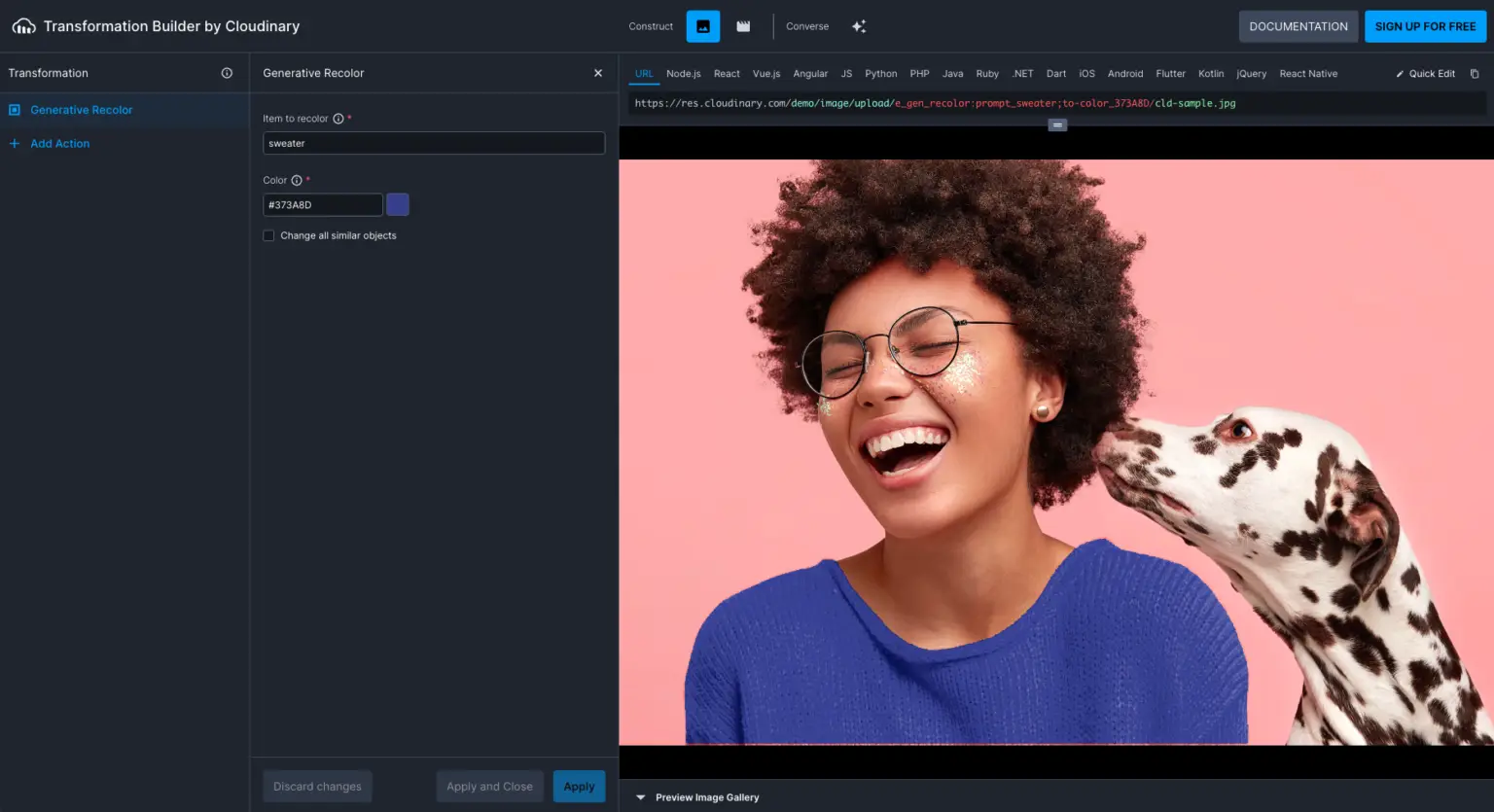

- Replace color

This function is still rather experimental, but when I came across it, it blew my mind and I could immediately see the benefit it provides in high volume e-commerce product photography workflows.

What makes this feature really outstanding in my mind is that you instruct the manipulation engine through natural language to recolor a very specific object in the image, e.g. ‘the t-shirt’, ‘the hat’, etc. The engine understands the instruction using NLP, and it knows the location of the exact pixels of that object using segmentation.

In high-volume fashion product photography, this could save valuable time: a piece is shot once on a model, and additional colors are generated automatically using workflow automation and AI image manipulation.

The command used for the sample below is:

https://dynamic.delivery-platform.com/media/images/assetname.jpg?e_gen_recolor:prompt_sweater;to-color_373A8D/
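The recolor request above is driven by a generative model, but the underlying idea can be illustrated without one: change only the pixels that belong to the sweater. The Python sketch below uses a swapped-in, non-generative technique. It assumes a segmentation mask already exists and that it comes from a model that understood the prompt. The sketch replaces the hue and saturation of the masked pixels with those of the target color `373A8D` and keeps each pixel’s original lightness, so folds and shadows survive. `recolor_masked` is a hypothetical helper.

```python
import colorsys
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def recolor_masked(pixels: Sequence[RGB], mask: Sequence[bool], target_hex: str) -> List[RGB]:
    """Give masked pixels the target hue/saturation while keeping their lightness."""
    tr, tg, tb = (int(target_hex[i:i + 2], 16) / 255 for i in (0, 2, 4))
    target_h, _, target_s = colorsys.rgb_to_hls(tr, tg, tb)
    out: List[RGB] = []
    for (r, g, b), selected in zip(pixels, mask):
        if not selected:
            out.append((r, g, b))  # outside the object: untouched
            continue
        _, lightness, _ = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
        nr, ng, nb = colorsys.hls_to_rgb(target_h, lightness, target_s)
        out.append((round(nr * 255), round(ng * 255), round(nb * 255)))
    return out


# One background pixel and one red 'sweater' pixel, recolored to #373A8D.
result = recolor_masked([(10, 10, 10), (200, 0, 0)], [False, True], "373A8D")
```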

- Remove object, replace object

At this point in time, I would say these generative AI models are in the ‘experimental’ category. It is very impressive to see what’s technically possible on demo sites, but it is just as obvious what doesn’t work yet. The technical feasibility is impressive, don’t get me wrong, but I honestly don’t see a good use case for these models. The object to be removed or replaced is specific to each and every image, which makes me wonder how the manipulation flags could be used in an automated way.

With generative fill, on the other hand, I can see how all images pushed out from a DAM to a dynamic transformation platform could be resized and delivered to the e-commerce site in a square format. In this case, an automation pipeline could be configured to crop or fill depending on the input format (landscape or portrait). It would then deliver images that are either cropped around the main subject of the image (smart cropping) or filled with pixels that would otherwise be missing.

https://dynamic.delivery-platform.com/media/images/assetname.jpg?removeprompt:phone

Summary and potential

There is great potential in these dynamic asset delivery services, especially for transformations using AI models. E-commerce sites are still a growing output channel for digital assets (images and video), and with them comes a growing need for digital content production in general. What we’re seeing today is just the beginning. Newer, more powerful and more resource-efficient AI models are being released on a weekly basis, so I have no doubt that we’re going to see immense progress in technologies for intelligent image and video transformation.

I truly believe that high-volume content production for e-commerce will be disrupted by the technology briefly described in this article.

Below are the dynamic media delivery solutions I have either worked with myself in a customer project or come across while researching. Since some of the AI models used are open source or can be licensed at some cost, there are countless other software services out there that are not listed here.

- Scaleflex

- Cloudinary

- TwicPics

- Adobe

- Amplience

- Imgix